Aravind Srinivas, who leads Perplexity AI, has recently drawn attention to a fault line running through the modern technology industry. In a brief clip shared on X at the start of 2026, he argues that the greatest challenge facing today's expanding data centers may not be competition or cost, but a quieter shift toward artificial intelligence that lives and works directly on personal devices.

Why Today’s AI Still Depends on Massive Infrastructure

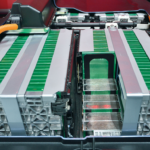

His claim becomes clearer when one looks at how AI ordinarily works today. Most interactions with artificial intelligence, such as asking a question, producing an image, or condensing a long text, are not handled on the machine in front of the user. Instead, the request is sent to remote installations: vast buildings filled with specialized chips.

These facilities consume enormous amounts of power, require continuous maintenance, and demand investment on a scale that only a handful of companies can afford. They exist because today's leading AI systems are so large that they need far more computing power than a phone or laptop can supply. That is why companies such as Meta, Google, and OpenAI still rely on these centers, and why, at least in the short term, the hardware that runs AI remains far from the people who use it.

A Future Where Intelligence Fits Inside Your Device

Srinivas argues that this arrangement is not permanent. In his view, the balance may shift once artificial intelligence becomes compact enough to fit entirely within the devices people already carry. If a model can be shrunk enough to run on a single chip inside a phone or computer, there is no longer any need to send one's questions and personal material to remote servers. His argument, made without fanfare, is that the day intelligence can run locally on a device is the day the great data centers begin to lose their significance.

Speed, Privacy, and the Case for Local Processing

He goes on to explain the practical implications of such a change. Running intelligence on the device would be faster, because responses would no longer have to wait for signals to travel across networks. It would also be more private: personal information would not be copied and stored somewhere else but would stay where it was created. To illustrate the idea, he compares local AI to a personal mind, always at hand, that is shaped by everyday use and learns quietly from its owner rather than from the crowd.

How AI Could Learn Directly From Individual Habits

Srinivas also raises what he calls test-time training. By this he means a system that observes the tasks a person repeats on their own machine, such as editing photos, writing messages, organizing presentations, or sorting files, and gradually adapts to those habits. Eventually, such a system could begin carrying out familiar tasks on its own, without relaying any information elsewhere.

In this form, he suggests, artificial intelligence stops feeling like a separate tool that one consults and begins to resemble an internal organ, a presence that evolves alongside its user. It is not rented but owned. It is, as he puts it, your own mind.

Are Massive Data Center Bets Becoming a Liability?

He does not deny that cloud-based intelligence still has its uses. What he questions is the wisdom of pouring extraordinary sums into ever-larger computing centers if comparable intelligence can live at the edge, inside ordinary devices. At present, the largest technology firms are wagering hundreds of billions, even trillions, of rupees on vast buildings crowded with processors in the hope of securing dominance. Yet if locally run AI advances as quickly as he expects, Srinivas argues, that logic weakens. In such a world, concentrating intelligence in distant facilities would look less like a necessity than a costly habit carried over from an earlier stage.

Final Words

If Srinivas is right, the tech giants may be building monuments to their own obsolescence. Of course, predictions about the future of technology have a dismal track record. Remember when we were all supposed to be commuting by jetpack by now? Still, there is something appealing about Srinivas's vision: an AI that knows you like an old friend, fits in your pocket, and does not need to beam your grocery lists to a distant server farm. Whether his prediction comes to pass remains to be seen.