Understanding word usage and identifying duplicate words are essential in various fields, including natural language processing, information retrieval, and data analysis. Term frequency (TF) is a crucial metric that helps quantify the importance of terms within a text. The following article will delve into techniques for calculating term frequency, explore different approaches to analyzing word usage, and discuss methods to identify and handle duplicate words effectively. But first, What Is Term Frequency?

What Is It?

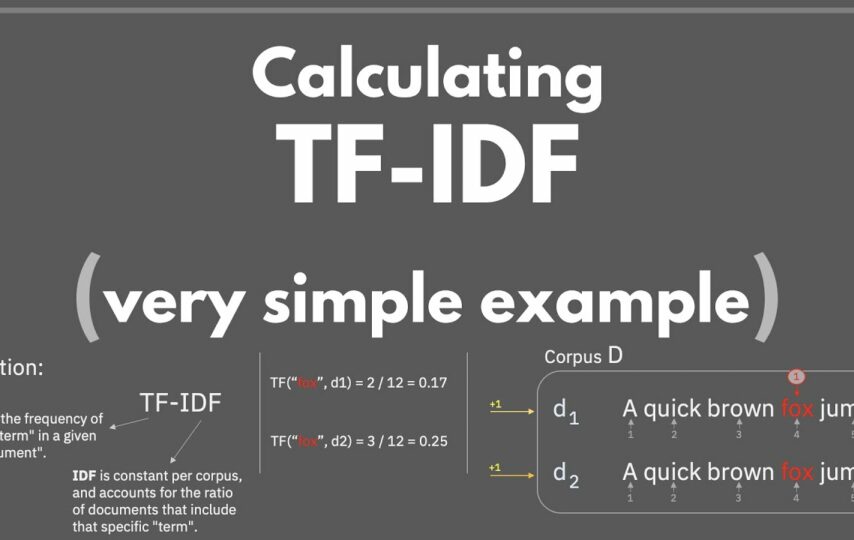

Term frequency represents the frequency of a word’s occurrence within a given text or document. By calculating TF, folks gain insights into words’ importance and relevance to the overall context. TF forms the basis for numerous text mining and information retrieval techniques, including TF-IDF (Term Frequency-Inverse Document Frequency).

- Basic Term Frequency Calculation: To calculate this, fellows divide the number of times a specific expression pops up in a document by the total number of expressions. The resulting value measures how frequently the expression occurs relative to the other expressions in the document.

- Normalized Term Frequency: This accounts for variations in document length. Instead of using raw frequencies, fellows divide the number of occurrences of a word by the total number of terms in the document. This normalization prevents longer documents from having higher term frequencies simply due to their length.

Analyzing Word Usage

Analyzing word usage involves examining the frequency of words across multiple documents or within a single document. This process helps folks identify important terms, discover patterns, and gain insights into the underlying meaning of the text. Here are two common techniques for analyzing expression usage:

- Word Frequency Distribution: It provides a histogram or bar graph representation of expression frequencies within a text corpus. It highlights the most frequent expressions and helps identify the important terms within a collection of documents.

- Word Clouds: These visually depict word frequencies, with more frequent words appearing larger in size. They offer a quick overview of the most prominent terms in a document or a corpus, aiding in understanding the main themes and topics.

Identifying Duplicate Terms

Duplicate terms can introduce noise and hinder accurate analysis. Detecting and handling them is crucial to maintain data integrity. Consider the following techniques for identifying and addressing duplicate terms:

a. Tokenization and Lemmatization Tokenization breaks down text into individual words or tokens, while lemmatization reduces words to their base or root form. Applying these techniques helps identify duplicate expressions more effectively by standardizing term representation.

b. Removing Stop Words: These are standard terms that carry little meaning and frequently occur in texts (e.g., “the,” “and,” “is”). Removing them helps eliminate duplicates that may arise due to these ubiquitous terms.

c. Using Sets or Dictionaries: Using sets or dictionaries efficiently identifies and eliminates duplicate expressions. Sets only retain unique elements, making comparing and eliminating duplicates easy. Dictionaries allow people to track the frequency of each expression while identifying duplicates.

d. Taking the Help of Online Tools: Online tools offer efficient solutions for finding duplicate words in your writing, streamlining the editing process, improving clarity, and enhancing the overall quality of your work.

Conclusion

Before calculating TF and analyzing word usage, one must find the answer to the query: What Is Term Frequency? Only after this can one thoroughly understand how to calculate term frequency and employ various methods to identify and handle duplicate expressions, enhancing the textual analysis’s accuracy and reliability. These techniques find applications in numerous fields, from search engines and information retrieval systems to sentiment analysis and content classification, aiding in better understanding and leveraging textual data.